Why we must learn how to accept uncertainty

James Perry, Editing Director

Artificial intelligence is creeping into all walks of life as its potential uses are explored. Despite the many advantages such technology could bring, adoption into commercial aviation has been slower than elsewhere. We explore the reasons why, and how this can change.

In 2022, OpenAI released the first public version of ChatGPT [1]. The name easily rolls off the tongue nowadays, and few readers would not be familiar with it. Casting one’s mind back two years shows how much the world has changed in such a short amount of time. Two years ago, there was no way to put something on paper except by writing it yourself. There was no way to solve a bug in your code except by searching Stack Overflow and hoping somebody else had already solved the problem for you. And there was no doubt about the uniqueness of the creativity of mankind. A computer could never be an artist, at least not until 2022.

Daily life feels the same. For students, the convenience of AI is generally offset by higher expectations, just as calculus remained as challenging after the advent of calculators. More websites have AI chatbots, autocomplete is better, and there has been an increase in faked hyper-realistic videos online – but what AI hasn’t changed is that you still have to wake up early for your commute! Below the surface, however, the uptake of AI has been stark. 2023 saw a near-doubling in the prevalence of words such as “commendable”, “intricate” and “meticulous” in scientific publications, all of which are more commonly used by generative AI than by human authors. A University College London study [2] estimated that over 1% of papers were at least partially written by AI that year, although closer to 0.1% disclosed it. Taking a probabilistic approach, researchers at Stanford University [3] found much higher numbers, up to 17.5% in computer science. Some papers even have phrases like “as an AI language model” left in! Such giveaways aside, the wide range of estimates shows how hard it is to tell when AI has or has not been used. The point is that AI use is widespread in academia and beyond. When it is impossible to detect whether an action is human or machine-driven, it is futile to argue the world has not fundamentally changed.

The rise of automation

Humans are troublesome. Almost since the dawn of powered flight, automation has been creeping into aviation to help overcome the limitations of humans, the one element of an aircraft that we cannot design. Especially in emergencies, pilots face a high workload in a stressful environment. Automation can help to relieve that workload, allowing pilots to focus on making safe decisions. It is difficult, however, to find examples of when computers have saved lives – an aircraft proceeding without an accident isn’t newsworthy.

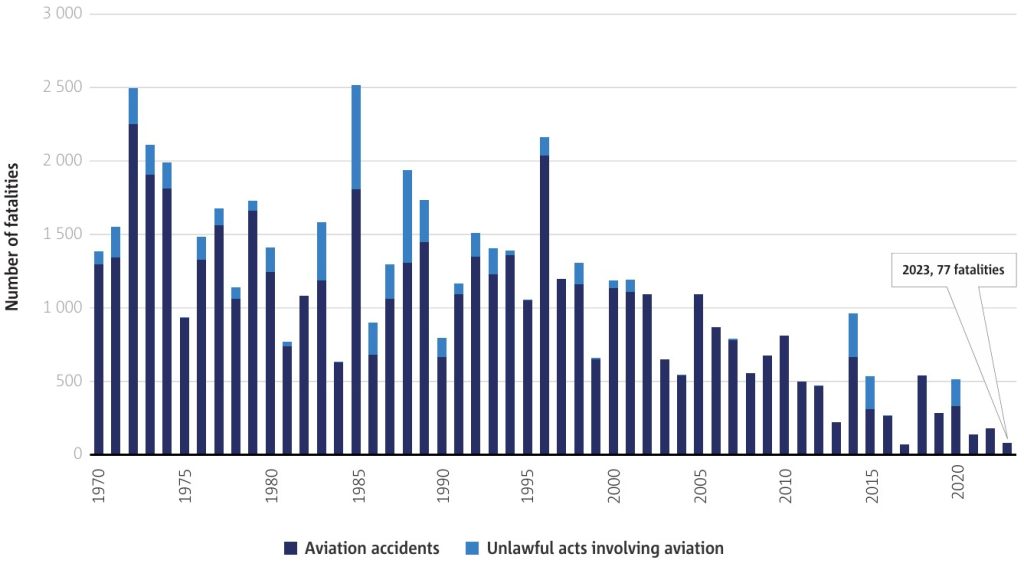

However, over the last few decades, automation has heavily contributed to the incredible improvement in aviation safety, as shown by Figure 1. In 2023, there were 77 fatalities in commercial and cargo operations worldwide, none of which involved airliners, down from a peak of over 2,500 in 1980 [4]! Compared to the nearly 4.5 billion passenger journeys [5], that’s a chance of about 1.7×10⁻⁸, or roughly 1 in 58 million. This means last year you were 80 times more likely to be struck by lightning than to die when you flew [6]. Only 4% of serious incidents over the last 5 years were caused by system or component failure, avionics-based or otherwise [4]. Automation has also led to tragic accidents, including the infamous MCAS system onboard Lion Air Flight 610 and Ethiopian Airlines Flight 302, which the Leonardo Times has already discussed to a great extent. Such accidents are so noteworthy because the reliability of aircraft systems is usually so high, making it shocking when things go wrong.
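The per-journey risk quoted above can be checked with a few lines of arithmetic. This is only a rough sketch: it treats every passenger journey as equally risky and uses the rounded figures from the text, and the variable names are purely illustrative.

```python
# Rough check of the 2023 fatality risk, using the rounded figures quoted in the text.
fatalities_2023 = 77          # fatalities in commercial and cargo operations [4]
passenger_journeys = 4.5e9    # approximate passenger journeys [5]

risk_per_journey = fatalities_2023 / passenger_journeys
journeys_per_fatality = passenger_journeys / fatalities_2023

print(f"risk per journey: {risk_per_journey:.1e}")                 # 1.7e-08
print(f"about 1 in {journeys_per_fatality / 1e6:.0f} million")     # about 1 in 58 million
```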

By contrast, flight crew error was the most common cause of fatal accidents over the last five years [4]. This was often caused by confusion or mismanagement of challenging circumstances, such as thunderstorms or minor technical failures, ultimately leading to loss of control. One benefit of automation is that it does not get tired or confused. A computer system can process more information than a human, faster, for considerably longer, and with almost perfect accuracy. The quantity of data generated by aircraft continues to increase. The average commercial aircraft produces 20 terabytes of data per hour from sensors in each engine alone [7]! The human mind can consciously process the equivalent of 120 bits of data per second [8], so it would take over 4,000 people ten years to sift through all the information just one engine produces in an hour. That’s before considering all the other aircraft systems, from hydraulics to electrical power, pneumatics to air conditioning. Modern aircraft cannot be flown by humans alone; we need computers.
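As a back-of-the-envelope check of the comparison above, the sketch below assumes that 20 terabytes means 20×10¹² bytes produced by one engine in one hour, and that each person reads continuously at 120 bits per second without ever resting. Both are simplifications, and the variable names are illustrative.

```python
# How many people would need ten years to read one engine-hour of sensor data?
BITS_PER_BYTE = 8
SECONDS_PER_YEAR = 365.25 * 24 * 3600

engine_data_bytes = 20e12       # assumed: 20 TB from one engine in one hour [7]
human_rate_bits_per_s = 120     # conscious processing rate quoted in the text [8]

total_bits = engine_data_bytes * BITS_PER_BYTE
person_years = total_bits / human_rate_bits_per_s / SECONDS_PER_YEAR
people_for_ten_years = person_years / 10

print(f"person-years needed: {person_years:,.0f}")
print(f"people needed over ten years: {people_for_ten_years:,.0f}")
```

Under these assumptions the answer comes out at a little over 4,000 people working non-stop for a decade.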

TU Delft’s Center for Excellence in AI for Structures is investigating the potential to use data from acoustic waves for composite structural health monitoring [9]. This involves attaching piezoelectric transducers to an aircraft’s structure, allowing the signals to be interpreted and the health of the structure determined. This has great potential to reduce preventative maintenance, as components can be replaced based on their actual state, which saves money and is better for the environment. Imagine the sheer quantity of data an aircraft would produce when the entire structure is monitored!

Up until now, automation has always been a tool for human pilots. It has already made the positions of radio operators, navigators, and most recently flight engineers, redundant. Pilots can get the information they need at the push of a button, which once required an extra crew member. EASA classifies AI applications into three categories [10]: Level 1 – assistance to a human; Level 2 – human-AI teaming; and Level 3 – advanced automation, either overridable or not. The latter would permit Single-Pilot Operations, which EASA is slowly pursuing in the face of several safety concerns. Whether this stage is reached or not, AI can greatly improve current autopilots, allowing them to cope with far more challenging situations than traditional control systems can [11].

Anything above Level 2 automation requires a different approach to the current automated systems. The computer is no longer a tool, but a flight crew member capable of making its own decisions, which may disagree with the human pilots, for better or worse. The computer acts independently, under human supervision, in the interest of the safety of the aircraft. This is a huge change in how we use automation – it’s like letting ChatGPT reply to all your emails and just checking them periodically. Probably a bad idea at the moment, but it could save so much time for more important things. The software only requires slight improvement – so what’s the barrier?

Engineering is Hard

The impressive safety record of commercial aviation is only maintained by strict safety regulations, which include certification requirements for all new technologies. Usually, this is a fairly straightforward matter – simply determine what system performance is safe and ensure by testing that this safe behaviour is maintained throughout the aircraft’s lifespan. More bureaucratically, manufacturers must demonstrate compliance with the legislation through specified acceptable means. This is most publicly visible in destructive structural testing; videos of aircraft wings being bent until failure can be easily found online. The testing process is rigorous, from full-system flight tests down to individual components. Everything is tested and has a standard to meet to pass the test and be certified as safe to fly.

AI systems must be tested similarly if integrated into aircraft. They must be demonstrated to work exceptionally safely under all conditions the aircraft will encounter, potentially facing increased scrutiny in light of public scepticism. But AI is notoriously difficult to test. Software is usually tested through white-box testing, which verifies that all paths through the program work as intended, and black-box testing, which verifies only that given inputs lead to the correct outputs. Black-box testing is the only method for traditional neural networks [12]. The network learns patterns in data and, while we can inspect the nodes and weights, we usually don’t understand exactly how an AI system reaches the answers it does. All that can be seen is the correct answer being generated for the input given. This is not good enough! AI in aircraft systems will not be faced with a finite number of discrete, testable scenarios, but rather a huge influx of continuous data from a range of sometimes erroneous sources. It is impossible to exhaustively test the response to every condition that the AI might face, especially if it is allowed to continue learning while in use. It may work once, but how can we be certain it will work every time? Safety standards will not budge for the sake of convenience.
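To make the limitation concrete, here is a minimal sketch of black-box testing in Python. The function `opaque_model` is a hypothetical stand-in for a trained network, and the input ranges and the safety property (an output between -1 and 1) are invented for illustration. The test only ever sees inputs and outputs, never the internals.

```python
import random

def opaque_model(airspeed_kt: float, pitch_deg: float) -> float:
    """Hypothetical stand-in for a trained network; returns an elevator command."""
    raw = 0.02 * (250.0 - airspeed_kt) - 0.05 * pitch_deg
    return max(-1.0, min(1.0, raw))

def black_box_test(model, n_samples: int, seed: int = 0) -> int:
    """Feed random inputs to the model and count outputs outside [-1, 1]."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(n_samples):
        airspeed = rng.uniform(100.0, 500.0)   # assumed input range, illustrative
        pitch = rng.uniform(-15.0, 30.0)       # assumed input range, illustrative
        command = model(airspeed, pitch)
        if not -1.0 <= command <= 1.0:
            failures += 1
    return failures

failures = black_box_test(opaque_model, 10_000)
print(f"violations found in 10,000 sampled inputs: {failures}")
```

A clean result says nothing about the inputs that were not sampled. With continuous inputs there are infinitely many of those, which is why passing such a test cannot by itself certify the system.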

There is also the question of accountability, which has been raised many times for self-driving cars. When a self-driving vehicle crashes, who is to blame? The manufacturer or the human supervisor? And, if the responsibility is shared, to what extent? How advanced does AI have to be before the software itself is blamed, just as if it were human? Attributing responsibility is important for the justice system but also before accidents even occur. Clear accountability ensures people know what they are responsible for and act accordingly. Where the blame can’t be traced, there is always the option not to care, not to do anything about a problem.

Once the manufacturer has developed an AI system, its use case must also be considered. Just because it looks good on paper does not mean it will see success in the real world. Pilots need to be comfortable supervising and operating an AI system. They must understand its decision-making process and challenge or override it if necessary. They should not rely on it without alternatives and should be able to troubleshoot the system like any other in the event of a malfunction. How do you figure out what’s gone wrong with an abstract network of mathematical weights?

Communication Matters

It is also dangerous for pilots to be overly cautious. An example of this is Air France Flight 447, which in 2009 crashed into the Atlantic Ocean en route from Rio de Janeiro to Paris, sadly killing all 228 on board [13]. Pitot tube icing caused the flight computer to receive inconsistent inputs, which caused it to disengage the autopilot and flight envelope protection, handing manual control back to the pilot flying, First Officer Pierre-Cédric Bonin. The avionics functioned as intended; the pilots simply needed to fly the aircraft manually. However, the pilots were not used to flying manually at altitude, where handling characteristics are quite different from nearer to the ground, and the A330 entered a pilot-induced oscillation. Although the aircraft recovered from this, Bonin had also unintentionally begun to pitch the aircraft up and it started to stall. None of the three pilots acknowledged the stall, which was indicated to them as designed by stall warnings and buffeting. The computer trusted them with control, so they thought they knew best.

One can argue that the concept of human error is only a myth. Maybe there is no such thing, but rather the failure of a complete system which includes humans in the loop. Of course people will make mistakes; it’s in our nature. Systems and procedures must therefore be designed to prevent our failings from leading to such consequences. While the flight computer in this example behaved as programmed, how it was programmed was not suited for this situation. The aircraft was fully stalled for three and a half minutes, which should have been ample time for three experienced flight crew to recover, or at the very least decrease the angle of attack below the 35° at which it remained. They did not do this, because the computer considered its airspeed indications unreliable at such high angles of attack and temporarily deactivated the stall warning. This meant that the stall warning resumed whenever the angle of attack was reduced, so the pilots were afraid to reduce it. The system designers didn’t consider a situation where the aircraft could be at such a high attitude and the pilots did not notice that they were stalled, so the computer did not communicate this information to the pilots in control [13].
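The trap described above can be illustrated with a toy version of the warning logic. This is a sketch under stated assumptions, not the A330's actual implementation: the function name, the two thresholds and the sample readings are all invented to show how a warning that mutes itself on unreliable data can end up punishing the correct action.

```python
MIN_VALID_AIRSPEED_KT = 60.0   # assumed validity threshold, illustrative only
STALL_WARNING_AOA_DEG = 10.0   # assumed warning threshold, illustrative only

def stall_warning(aoa_deg: float, measured_airspeed_kt: float) -> bool:
    """Toy warning logic that is inhibited when the airspeed reading is judged invalid."""
    if measured_airspeed_kt < MIN_VALID_AIRSPEED_KT:
        return False  # data treated as unreliable, so the warning stays silent
    return aoa_deg > STALL_WARNING_AOA_DEG

# Deep in the stall: extreme angle of attack, very low measured airspeed.
deep_stall = stall_warning(aoa_deg=35.0, measured_airspeed_kt=50.0)

# The pilot pushes the nose down: the reading becomes valid again, still stalled.
recovering = stall_warning(aoa_deg=25.0, measured_airspeed_kt=80.0)

print(f"warning while deeply stalled: {deep_stall}")        # False
print(f"warning while starting to recover: {recovering}")   # True
```

In this toy model the alarm is silent in the worst condition and sounds as soon as the crew begins to do the right thing, which is exactly the kind of feedback that teaches the wrong lesson.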

This story highlights the vital importance of accurate and efficient human-machine interaction, but also trust. Bonin did not trust the computer from the moment of the icing, despite its otherwise normal function. If an automated system is untrusted and so ignored or even overridden, the resulting situation can be even less safe. The whole system must be assessed, both human pilots and automated systems together. The safest outcome does not rely on perfecting either element individually, but rather on the system as a whole and the cooperation between these two players.

Source: Trust Me

So, how do we avoid the advent of AI systems leading to such accidents, whether through genuine fault or miscommunication? XAI stands for “eXplainable Artificial Intelligence”, and refers to any AI system that explains how it reached an output. This is vital for improving trust in AI systems, and it instils in humans the confidence and power to tell whether a mistake has been made. The explanation may be interactive, such that humans submit a text-based query and receive a written explanation, but it could also take the form of graphical displays or plots showing the model mapping from input to output, or the confidence that the output is correct.

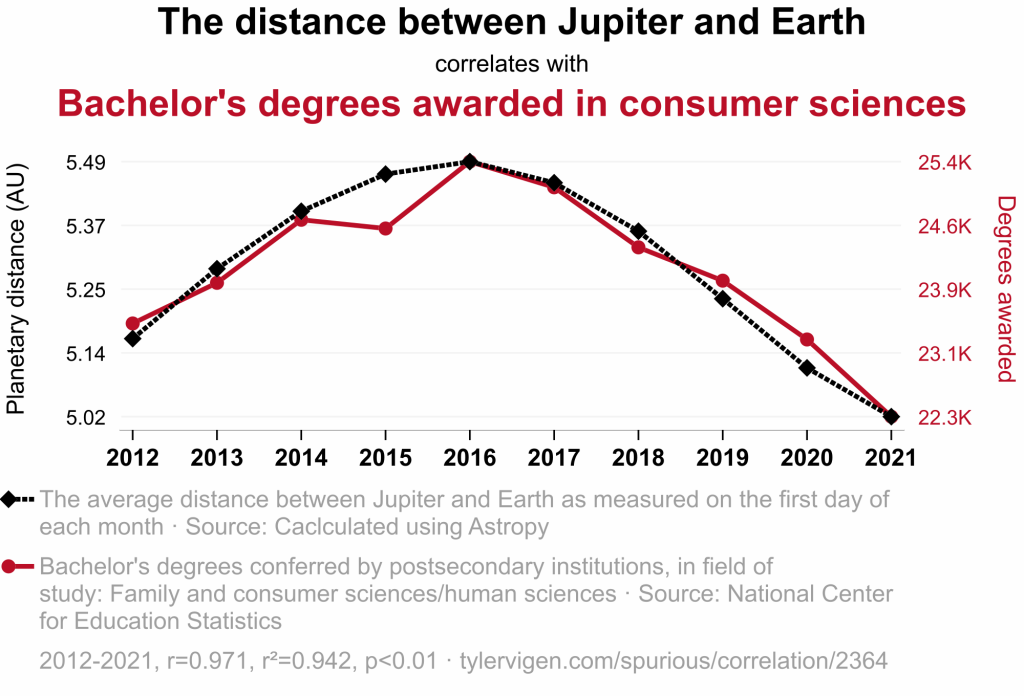

Often, a grey-box method is proposed, where the inner workings of a model can be partially understood while retaining the high performance that pure white-box systems fail to achieve. In addition to model interpretability and post-hoc explainability, XAI should justify the data being used as input. A model may be sound based on an existing correlation, passing all the grey-box tests, but if the correlation does not make real-world sense then it is foolish to trust it. Tyler Vigen has a website of spurious correlations, one such example being how the distance between Jupiter and Earth correlates with bachelor’s degrees awarded in consumer science, see Figure 2 [14]. The website also includes a five-page AI-generated paper describing a farcical study that leads to these results! It’s all nonsense, but if you couldn’t tell, how could you know whether to believe it? A written excuse is insufficient by itself; only full consideration of the input data and model process provides a proper explanation.
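It is easy to reproduce the effect. The sketch below builds two invented series that have nothing to do with each other except that both happen to rise over time, then computes their Pearson correlation coefficient. The numbers are made up for illustration and are not taken from Tyler Vigen's data.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

years = list(range(10))

# Two invented, causally unrelated quantities that both trend upwards.
degrees_awarded = [100 + 5 * t + 2 * (-1) ** t for t in years]
planet_distance = [4.0 + 0.3 * t - 0.1 * (-1) ** t for t in years]

r = pearson(degrees_awarded, planet_distance)
print(f"correlation coefficient: {r:.2f}")
```

The coefficient comes out well above 0.9, yet neither series tells us anything about the other. A model trained on such a pairing could pass its tests and still be meaningless.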

Even in this context, the complete system should not be ignored. Interpreting the XAI results is yet another potential source of misunderstanding, and well-placed trust can only be achieved with a total understanding of the system’s abilities, limitations and methods. Sutthithatip et al. [12] even suggest a need for dedicated training and certification for XAI users in aerospace, such is the concern over the risk of misuse and mistrust. XAI is surely the way forward, but there is so much variance within the field that it is hard to tell which solution will prove safest and most effective. Previously, aviation has learned from tragic accidents, but with standards so high we cannot afford anything but to get AI right the first time.

The three levels of automation described by EASA are a subset of the complete possibilities of human-supervised automation, the most extreme of which is that the “computer does the whole job, if it decides it should be done, and informs human, if it decides human should be told” [15]. It becomes clear that trust will eventually go both ways, depending on whether the computer believes the human can improve the situation. This conjures memories of the science-fiction novel “2001: A Space Odyssey”, in which the computer HAL kills the crew to protect its mission. But, consider the implications when an AI’s objective is to preserve life instead. As Air France Flight 447 has shown, humans don’t always know best. If it is probabilistically the safest option not to involve humans in decision-making, it is difficult to argue why we should be.

Conclusion

Despite rapid progress in the last few years, AI still faces major hurdles before it can enter safe use in commercial aviation. It is challenging to build trust in a system you don’t understand, which is why XAI is vital to this transition. It is common for articles such as this to ask whether you would put your life in the hands of a computer, but consider that such a computer would only ever be certified to the highest standards of provable safety. Perhaps the harder question is: why do you place your life in the hands of humans?

References

[1] Marr, B. (2024, February 20). A short history of ChatGPT: How we got to where we are today. Forbes. Retrieved December 19, 2024, from forbes.com

[2] Gray, A. (2024). ChatGPT “contamination”: estimating the prevalence of LLMs in the scholarly literature. arXiv (Cornell University). doi.org/10.48550/arxiv.2403.16887

[3] Liang, W., Zhang, Y., Wu, Z., Lepp, H., Ji, W., Zhao, X., Cao, H., Liu, S., He, S., Huang, Z., Yang, D., Potts, C., Manning, C. D., & Zou, J. Y. (2024). Mapping the increasing use of LLMs in scientific papers. arXiv (Cornell University). doi.org/10.48550/arxiv.2404.01268

[4] EASA. (2024). Annual Safety Review 2024.

[5] Statista. (2024, October 11). Global air traffic – scheduled passengers 2004-2024. Retrieved December 19, 2024, from statista.com

[6] Holle, R. L. (2016). The number of documented global lightning fatalities. In 24th International Lightning Detection Conference & 6th International Lightning Meteorology Conference.

[7] Pohl, T. (2015, February 19). How big data keeps planes in the air. Forbes. Retrieved December 19, 2024, from forbes.com

[8] Your Brain is Processing More Data Than You Would Ever Imagine. (2023, August 29). Minecheck. Retrieved December 19, 2024, from minecheck.com

[9] Moradi, M., Gul, F. C., & Zarouchas, D. (2024). A Novel machine learning model to design historical-independent health indicators for composite structures. Composites Part B Engineering, 275, 111328. doi.org/10.1016/j.compositesb.2024.111328

[10] EASA. (2023). EASA Artificial Intelligence Roadmap 2.0. easa.europa.eu

[11] Demir, G., Moslem, S., & Duleba, S. (2024). Artificial intelligence in Aviation Safety: Systematic review and biometric analysis. International Journal of Computational Intelligence Systems, 17(1). doi.org/10.1007/s44196-024-00671-w

[12] Sutthithatip, S., Perinpanayagam, S., Aslam, S., & Wileman, A. (2021). Explainable AI in aerospace for enhanced system performance. 2021 IEEE/AIAA 40th Digital Avionics Systems Conference (DASC), 1–7. doi.org/10.1109/dasc52595.2021.9594488

[13] Oliver, N., Calvard, T., & Potočnik, K. (2018, March 5). The tragic crash of flight AF447 shows the unlikely but catastrophic consequences of automation. Harvard Business Review. Retrieved December 19, 2024, from hbr.org

[14] Spurious correlation #2,364. (n.d.). Tyler Vigen. Retrieved December 19, 2024, from tylervigen.com

[15] Ali, S., Abuhmed, T., El-Sappagh, S., Muhammad, K., Alonso-Moral, J. M., Confalonieri, R., Guidotti, R., Del Ser, J., Díaz-Rodríguez, N., & Herrera, F. (2023). Explainable Artificial Intelligence (XAI): What we know and what is left to attain Trustworthy Artificial Intelligence. Information Fusion, 99, 101805. doi.org/10.1016/j.inffus.2023.101805